How to Make *Space* for Robots

#INVESTIGATION | Billions in investment are flowing into the development of humanoids, but who is preparing actual humans for the psychological threat & existential stress? A few thoughts...

Morgan Stanley reported this month that 8 million humanoids are expected to share our soil by 2040, with that number reaching 63 million by 2050. Elon Musk is even more bullish, predicting that our robotic neighbors will eventually outnumber us 2:1.

As with much talk of AI innovation today, the focus is usually on investment and technology, with only the occasional existential musing thrown in for good measure. But the practical and psychological impact of humanoids joining our world should be addressed now (and more high-EQ thinkers should be involved in humanoid development and discussion, but that’s a topic for another day…).

Robot vs. Humanoid

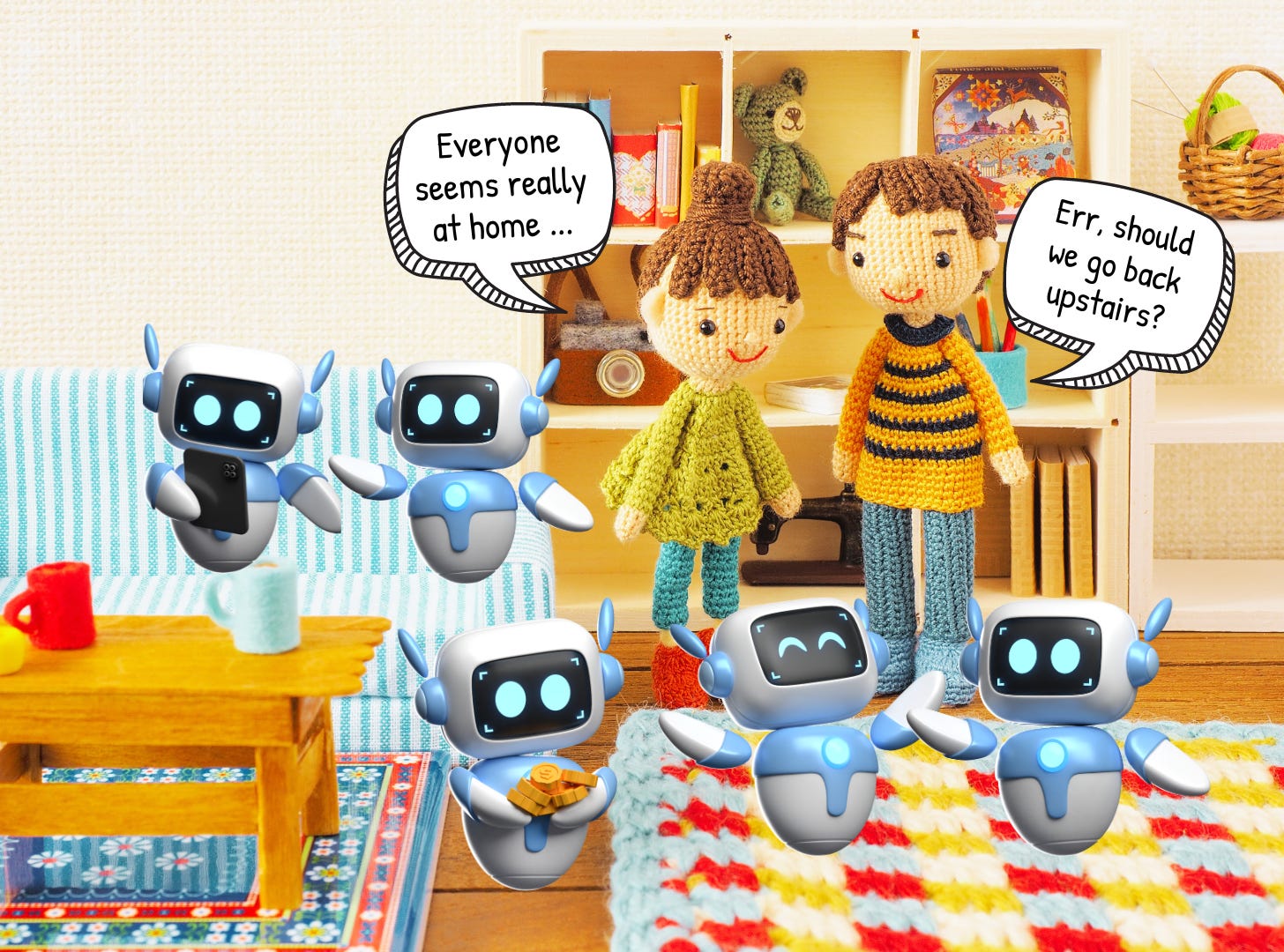

A humanoid is a robot built to resemble humans. The distinction matters for understanding some of the more immediate issues, especially for our kids. We’re not just talking about an extra pair of hands, as it were, but about pressure on our psyche to make room for a presence that is not human yet mimics our humanity. It will be overwhelming and confusing, and it will introduce a long list of moral, societal, and behavioral quandaries to sort out.

We’ve been conditioned as a society to think of technology as cold, mathematical, hard to understand, and the domain of hoodie-wearing young men. Frankly, that assumption will come back to haunt us if we ignore that the biggest invention of our lifetimes will look and act like us.

Addressing the Stress to Our Psyche

There are, of course, many great reasons why investment is flowing into the development of humanoids, including the number of factory jobs currently unfilled and the chance to protect actual humans from dangerous work, from war, and so on.

But it’s also clear that while one sector is moving ahead, the rest of us are more focused on Taylor Swift than on technological innovation (…she’s amazing, and it’s not really anyone’s fault). Search Google News for “humanoid” and you’ll need to dig into Interesting Engineering, the Robot Report, CNBC, or maybe Venture Capital Journal to understand what’s going on. That is a real red flag when we are looking at, even conservatively, a robot population the size of New York City’s just ~16 years from now.

It will probably take a decade for us to prepare mentally for humanoid neighbors, and we can start now with their chatbot precursors. A few ideas:

Consider that Chatbots are Gateway Humanoids

Hopefully you are starting to use chatbots such as ChatGPT. It’s very important to get used to these tools, for several reasons. The first, of course, is that they are taking over, literally and metaphorically, and we need to get comfortable with how they work and what they can do. The second, and far less discussed, is coming to terms with how chatbots mess with us emotionally (especially the voice-activated versions).

For instance, you may feel fearful of asking a “wrong” question. Or perhaps “embarrassed” for possibly sounding “stupid.” Or maybe you find it hard to admit that you don’t even know where to start (remember, it’s just a Google search on steroids… start with any question at all).

That said, while our feelings when using these tools are normal, there is nothing “normal” at all about the situation. These chatbots are not built to relate to us emotionally, and they come nowhere close to sentience. Think of their personality as merely a fake veneer slapped onto a perfectly perfunctory tool.

The conundrum is explained well by Shannon Vallor, professor of AI and data ethics at the University of Edinburgh and director of the Center for Technomoral Futures in the Edinburgh Futures Institute (as told recently to Techpolicy.press) ⤵️

I think where we're at today is actually quite a dangerous place because we have in many ways an often seamless appearance of human-like agency because of the language capability of these systems. And yet in some respects, these tools are no more like human agents than old style chatbots or calculators. They don't have consciousness, they don't have sentience, they don't have emotion or feeling or a literal experience of the world, but they can say that they do and that they feel things, want things, that they want to help us, for example. And they can do so in such convincing ways that it will be really unnatural feeling for people to treat them as mere tools.

Understand the (Already Here) Risks to Kids

Feeling a bit sheepish is not the biggest risk to our kiddos. The bigger risks are coercion and the chance that they will seek comfort and solace in a tool that is not human but sounds human.

We’ve all been young, emotional, and even lonely at some point. Imagine if you had had a “friend” who was always there and always told you what you wanted to hear. It’s alarming, really.

Unfortunately, it’s also already here, integrated into the platforms our kids use every day: Google Search, Snapchat, Instagram, Facebook, X. It’s everywhere, not just gated within, say, ChatGPT.

Chatbots also do not need to be operated by bad actors for emotional attachment or coercion to be possible. But we do need to consider what would happen if a bad actor did weaponize these tools against kids. It’s like peer pressure on steroids, and it deserves far more coverage than it gets (which is nearly none).

Mitigating the risk can start with something as simple as using these tools together as a family, talking honestly about how they make us feel (adults too), and working out a plan to avoid emotional attachment.

Have a Point-of-View

It’s frankly quite frustrating how disempowered most of us feel. This technology is a replication of our very being, and so, by design, we are all experts.

We are all experts on being human, so let’s act like it.

The path to a better future starts with all of us weighing in and considering what we think is best. At some point, our coexistence with humanoids will be at least partly legislated, and by then you need to be ready to voice your opinion and vote for what YOU feel is right.

Think of all the questions we’ll be asking ourselves, from liability (if a robot goes rogue, is the owner of the software, the owner of the hardware, or the individual or company that owns the humanoid liable?) to psychological risk, and even mundane topics such as storage (ummm, where do 8 million or 63 million robots go when not in use?). It’s not fantastical to chat about all of this today with your neighbors, family, colleagues, and friends.

Consider Safety Seriously

The other downside of allowing Big Tech to “figure this all out” right now is that we are trusting these companies with our safety. A long-standing rallying cry of the tech industry has been “move fast and break things.” The idea is that you must try, fail, and try again. But that idea takes on an entirely new meaning when the potential collateral damage is us.

Now, this doesn’t mean we face obvious and intentional danger; hopefully, laws and liability rules will still cover much of what we can foresee in the years ahead. But much about the risks will remain unknown, and it’s prudent to be cautious. Just think of what has already gone wrong when self-driving cars have failed in real-world environments.

We don’t want to stifle innovation, but we shouldn’t be guinea pigs either. So we must treat this innovation as our business and weigh it by what it means for our safety, not by how much money Elon Musk will make per humanoid (at a 2:1 rate, no less).